Search engine rankings are a winner-takes-all game. Click-through rates for the top three results range from roughly 30% down to 10%; by position nine, that’s dropped to just 2%. If your website doesn’t appear on page one for relevant search terms, it’s not reaching its full potential.

That’s where search engine optimization (SEO) comes in.

What is search engine optimization (SEO)?

SEO is the blanket term covering everything brands do to get organic traffic from search engines to their websites. SEO involves technical aspects like site architecture, as well as more imaginative elements like content creation and user experience.

Sounds relatively simple. But SEO is a moving target, even for experienced digital marketers. Myths abound, algorithms change, and SEO tactics that once worked can suddenly get you a penalty. To keep a site optimized, you need deep knowledge of how search engines “think,” as well as how real people think and interact with your web content. This mix of usability, site architecture, and content creation makes search engine optimization feel like a hybrid.

How Search Engines Work

Google alone handles over 3.5 billion searches per day. The search engine scours the world’s estimated 1.9B websites, returning relevant results in under 0.5 seconds. So what’s going on behind the scenes?

To return relevant results, a search engine needs to do three things:

- Create a list, or index, of pages on the web

- Access this list instantly

- Decide which pages are relevant to each search

In the world of SEO, this process is normally described as crawling and indexing.

Crawling and indexing

The web is made up of an ever-expanding mass of pages connected by links. Search engines need to find these pages, understand what’s on them, and store them in a massive data horde known as an index.

To do that, they use bots (known as spiders or crawlers) to scan the web for hosted domains. The crawlers save a list of all the servers they find and the websites hosted on them. They then systematically visit each website and “crawl” it for information, registering types of content (text, video, JavaScript, etc.) and number of pages. Finally, they use HTML attributes like href and src to detect links to other pages, which they then add to the list of sites to crawl. That allows the spiders to weave an ever-larger web of indexed pages, hopping from page to page and adding them to the index.
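As a rough illustration of the link-discovery step, the sketch below uses Python's standard-library HTML parser to pull href and src values out of a page and resolve them against the page's own URL. The names `LinkExtractor` and `extract_links` and the example.com URLs are hypothetical. Real crawlers also respect robots.txt, deduplicate URLs, and render JavaScript, all of which this sketch ignores.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs found in href and src attributes."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                # Resolve relative links against the page being crawled.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    """Return every linked URL in the order it appears in the HTML."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    return parser.links


sample_html = (
    '<a href="/about">About</a>'
    '<img src="logo.png">'
    '<a href="https://example.org/">Partner</a>'
)
for link in extract_links(sample_html, "https://example.com/blog/"):
    print(link)
```

Each URL found this way would be added to the crawler's queue of pages to visit next.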

Search engines store this information in huge physical databases, from which they recall data whenever anyone makes a search. Google has data centers spread across the world; their mammoth center in Pryor Creek, Oklahoma, has an estimated 980,000 square feet. This network allows Google to store billions of pages across many machines.

Search engines crawl and index continuously, keeping track of newly added pages, deleted pages, new links, and fresh content. When a person performs a search, the search engine has a fully updated index of billions of possible answers ready to deliver to the searcher.
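To make the idea of an index more concrete, here is a minimal sketch of an inverted index, a common data structure that maps each word to the pages containing it so lookups don't require rescanning every page. It is a toy model under simplifying assumptions (lowercase word matching, pages held in memory) and is not a description of how any particular search engine stores its data. The function names and sample pages are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Split text into lowercase alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the pages that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


pages = {
    "https://example.com/beans": "Fresh coffee beans roasted daily",
    "https://example.com/shops": "Coffee shops near me",
    "https://example.com/tea": "Loose leaf tea",
}
index = build_index(pages)
print(sorted(search(index, "coffee")))
```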

All that’s left to do is rank these results according to their relevance and quality.

How Search Engines Rank Relevant Results

If a search term returns hundreds of thousands of results, how does a search engine decide the best order to display them to the searcher?

Determining relevant results isn’t done by a team of humans at Search Engine HQ. Instead, engines use algorithms (mathematical equations and rules) to understand searcher intent, find relevant results, and then rank those results based on authority and popularity.

In an effort to stop black-hat SEO, search engines are famously unwilling to reveal how their ranking algorithms work. That said, search marketers know that algorithmic decisions are based on over 200 factors, including the following:

- Content Type: Searchers look for different types of content, from video and images to news. Search engines prioritize different types of content based on intent.

- Content Quality: Search engines prioritize useful and informative content. Those are subjective measures, but SEO professionals generally take this to mean content that’s thorough, original, objective, and solution-oriented.

- Content Freshness: Search engines show searchers the latest results, balanced against other ranking factors. So, out of two pieces judged to be of equal quality by the algorithm, the most recent piece will likely appear first.

- Page Popularity: Google still uses a variation of their original 1990s PageRank algorithm, which judges a page’s quality by the number of links pointing to it, and the quality of those links.

- Website Quality: Poor-quality, spammy websites are bumped down the rankings by search engines (more on that below).

- Language: Not everyone is searching in English. Search engines prioritize results in the same language as the search term.

- Location: Many searches are local (e.g., “restaurants near me”); search engines understand this and prioritize local results when appropriate.

By keeping these factors in mind, search marketers can create content that’s more likely to be found and ranked by search engines.
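The Page Popularity factor can be illustrated with the simplified, textbook form of PageRank, in which each page repeatedly shares its score among the pages it links to. This is only a sketch: the damping value of 0.85 is the figure commonly quoted for the original algorithm, the three-page link graph is invented, and the variation Google uses today is not public.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Estimate PageRank scores for a dict of page -> list of linked pages."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline score.
        new_ranks = {page: (1 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's score evenly among the pages it links to.
                share = damping * ranks[page] / len(targets)
                for target in targets:
                    new_ranks[target] += share
            else:
                # A page with no outgoing links spreads its score everywhere.
                share = damping * ranks[page] / n
                for other in pages:
                    new_ranks[other] += share
        ranks = new_ranks
    return ranks


# Hypothetical link graph: pages "a" and "b" both link to "c"; "c" links to "a".
graph = {"a": ["c"], "b": ["c"], "c": ["a"]}
scores = pagerank(graph)
for page in sorted(scores, key=scores.get, reverse=True):
    print(page, round(scores[page], 3))
```

In this toy graph, page "c" ends up with the highest score because two pages link to it, and "b" ends up lowest because nothing links to it.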

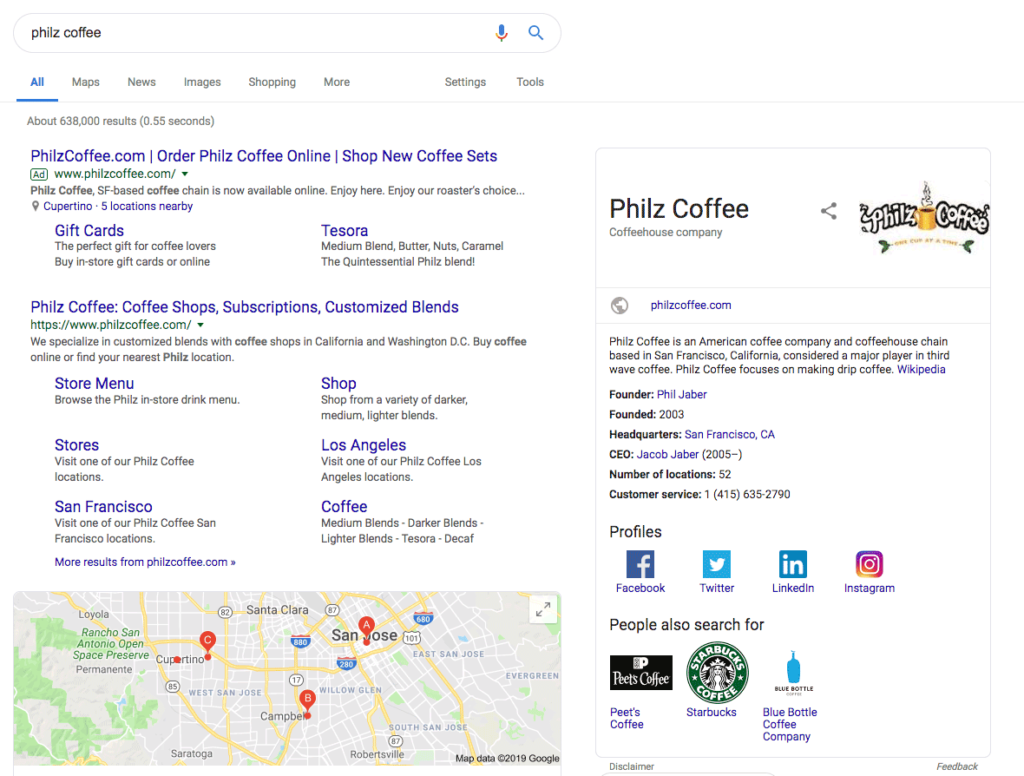

Types of Search Engine Results Page (SERP) Features

In the early days of search, results were presented as a simple list of descriptive snippets and links, like we saw in the previous image. In the last few years, these standard results have been supplemented by SERP features, enriched results that include images and supplementary information. When we search for Philz Coffee New England Patriots, for example, a feature result comes up with extra information that might be of interest to the searcher:

You can’t guarantee your site gets additional SERP features, but you can help increase your chances by:

- Creating a site structure that makes sense to both search engines and users.

- Structuring your content on page such that it is scannable for both search engines and users.

- Employing schema markup, or structured code that helps crawlers understand a site better.
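One common way to add schema markup is a JSON-LD block using the schema.org vocabulary. The sketch below generates such a block for an organization. The helper name `organization_jsonld` and the example business details are hypothetical, and adding markup like this does not guarantee that a SERP feature will appear.

```python
import json


def organization_jsonld(name, url, logo_url, social_profiles):
    """Build a JSON-LD script tag describing an organization."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo_url,
        "sameAs": list(social_profiles),  # links to official social profiles
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


snippet = organization_jsonld(
    "Example Coffee",
    "https://example.com",
    "https://example.com/logo.png",
    ["https://social.example/examplecoffee"],
)
print(snippet)
```

The resulting tag would typically be placed in the page's HTML so crawlers can read the company name, logo, and profiles without inferring them from the visible text.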

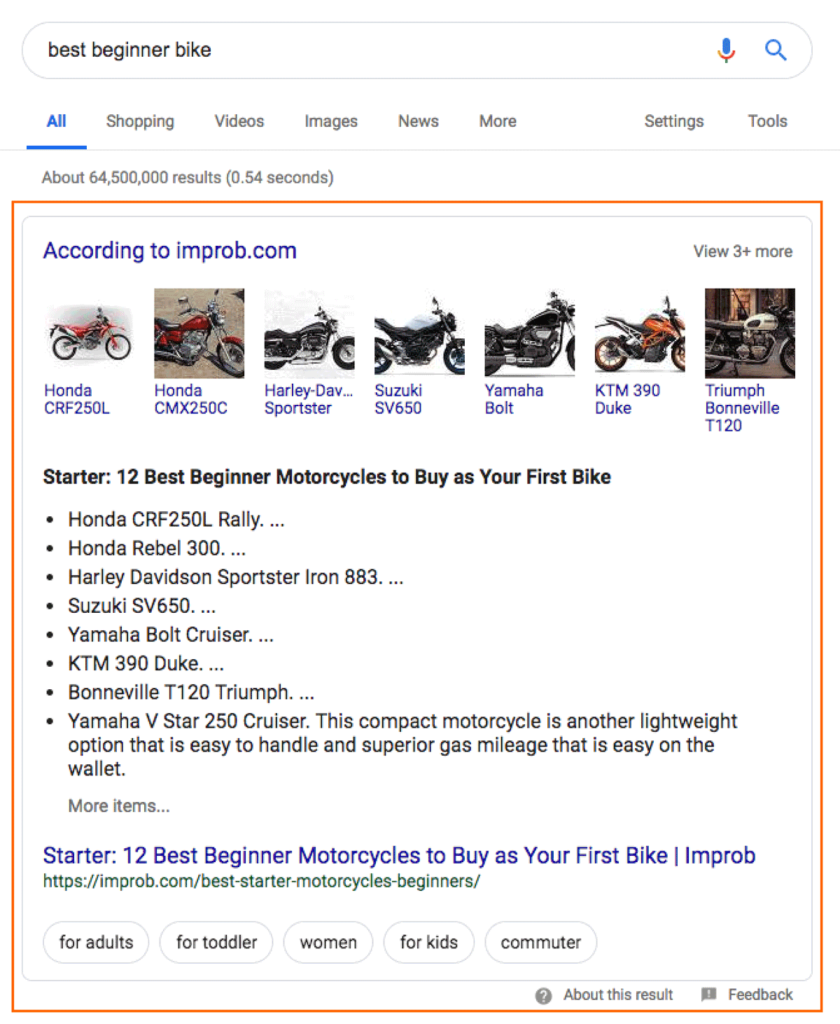

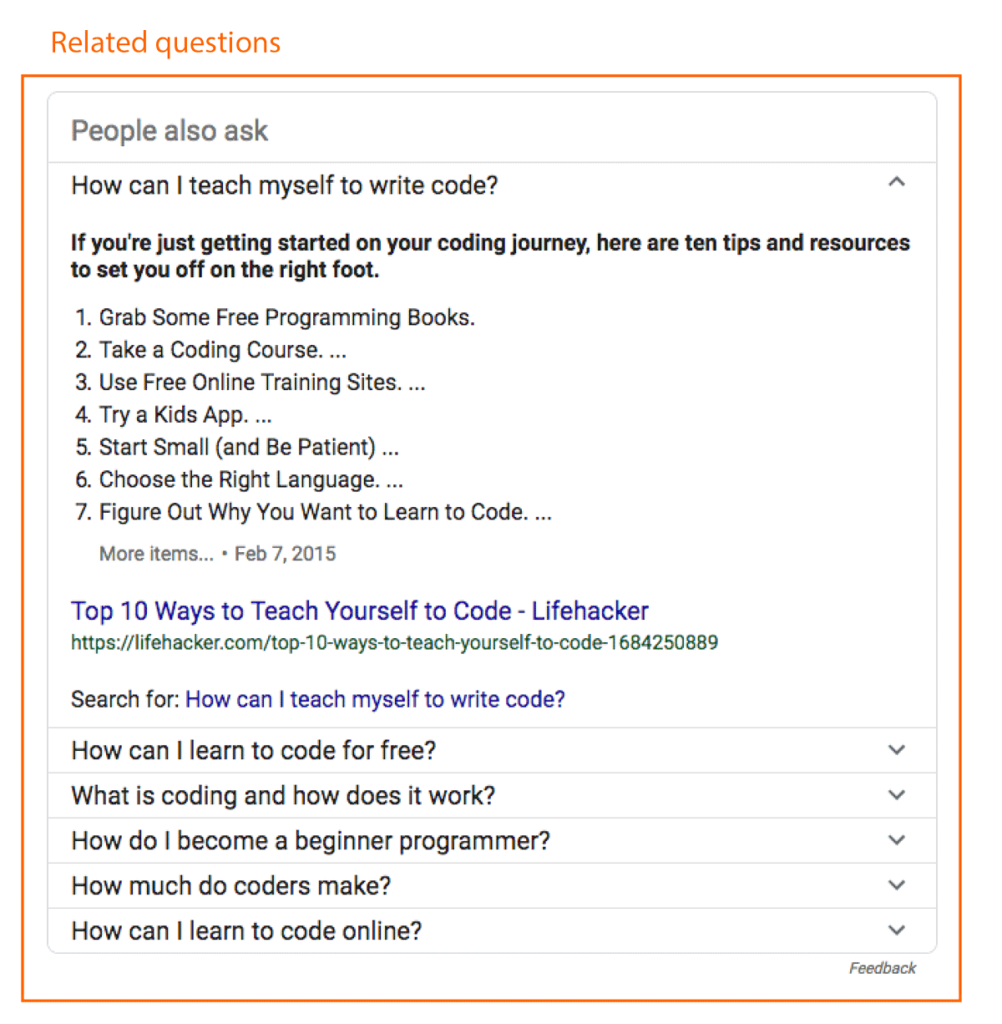

The most common organic SERP features include the following:

- Rich Snippet: Additional visual component in a traditional result (e.g. review stars for a restaurant listing).

- Featured Snippet: Highlighted block at the top of the search results page, often with a summarized answer to a search query and link to the source URL.

- People Also Ask: Also known as related questions, these are a block of expandable related questions asked by searchers.

- Knowledge Cards: Right-aligned panels showing key information about a search term. The Philz Coffee example from above shows a knowledge panel with their logo, company information, and social profiles.

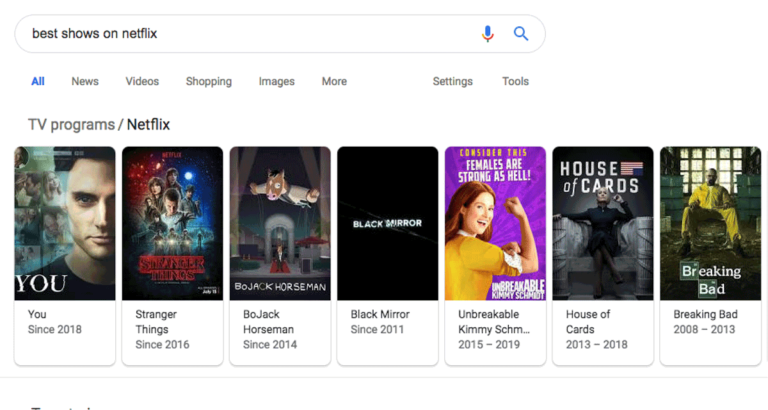

- Image Packs (carousels): Horizontal row of image links, appearing for searches where visual content is useful.

- Instant Answers: These results pop up when Google can give a quick answer to a searcher’s query, like when you search for the weather where you are right now. Unlike featured snippets, there’s no link to any source site.

Takeaway for Search Marketers

Search engines prioritize returning relevant, high-quality content based on the words used by people searching for information online. They do this by indexing all the pages they can find, building algorithms around relevance, and presenting results in different ways that are most relevant to the search intent.

By understanding how search engines find and grade web pages, you’re better equipped to create websites that rank higher and get more traffic. To do that, you’ll need a strategy based on three types of SEO: technical, on-page, and off-page.

What is Technical SEO?

Technical SEO—the art of optimizing a website for crawling and indexing—is a vital step in getting a site to rank. Build a site on shaky technical foundations and you likely won’t see results, no matter how high-quality your on-page content. That’s because websites need to be constructed in a way that lets crawlers access and “understand” the content.

Technical SEO has nothing to do with content creation, link-building strategies, or content promotion. Instead, it focuses on site architecture and infrastructure. As search engines become more “intelligent,” technical SEO best practices have to adapt and become more sophisticated to match. That said, there are some basic constants of technical SEO to take on board.

URL Hierarchy & Structure

It’s important to define a site-wide URL structure that is accessible to crawlers and useful to users. This doesn’t mean you have to map out every single page your website is ever going to have; that would be impossible. But it does mean defining a logical flow of URLs, moving from domain to category to subcategory. New pages can be slotted into that hierarchy when created.

Websites that skip this step end up with a mass of counterintuitive subdomains and “orphan pages” with no internal links. This isn’t a nightmare just for users; it also confuses crawlers and makes them more likely to abandon indexing your page.

On a granular level, SEO-friendly URLs should be structured in a way that describes the page’s content both for crawlers and for users. That means incorporating key search terms as close to the root domain as possible and keeping URL length to 60 characters, or 512 pixels. If optimized right, URLs act as a positive ranking factor for crawlers and motivate users to click through.
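The domain-to-category-to-subcategory pattern can be sketched as a small helper that turns page names into URL segments and checks the result against the 60-character guideline mentioned above. The function names and example.com domain are hypothetical, and applying the limit to the full URL (rather than only the path) is an assumption.

```python
import re

MAX_URL_LENGTH = 60  # guideline from the text, applied here to the whole URL


def slugify(text):
    """Lowercase the text and replace runs of other characters with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def build_url(domain, *segments):
    """Join a domain with category, subcategory, and page segments."""
    path = "/".join(slugify(segment) for segment in segments)
    return f"https://{domain}/{path}"


def is_within_length_guideline(url):
    """Check the URL against the length guideline."""
    return len(url) <= MAX_URL_LENGTH


url = build_url("example.com", "Running Shoes", "Women's Trail")
print(url, is_within_length_guideline(url))
```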

Page speed

The faster a web page loads, the better for search engine rankings. This is based on users’ preference for lightning-fast loading: 40% of searchers abandon a page that takes longer than three seconds to load.

Technical SEO focuses on reducing the use of elements that slow page loading. That means plug-ins, tracking codes, and widgets need to be kept to a minimum, and images/videos need to be compressed in size and weight. Online marketers and designers need to cooperate to achieve page design that contains all the necessary design features but still loads in under three seconds.

XML Sitemaps

An XML sitemap is a file that lists all the pages of a website, including blog posts. Search engines use this file when crawling a site’s content, so it’s important that the sitemap does not contain pages that you don’t want to rank in search results, like author pages.
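For illustration, the sketch below builds a minimal sitemap in the sitemaps.org XML format and leaves out pages you don't want to rank, such as author pages. The function name, the `exclude_prefixes` parameter, and the example URLs are hypothetical. Real sitemaps often carry optional fields such as last-modified dates, which are omitted here.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls, exclude_prefixes=()):
    """Return sitemap XML listing every URL that is not excluded."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        # Skip sections that should not appear in search results.
        if any(url.startswith(prefix) for prefix in exclude_prefixes):
            continue
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


site_urls = [
    "https://example.com/",
    "https://example.com/blog/what-is-seo",
    "https://example.com/author/jane",
]
sitemap_xml = build_sitemap(site_urls, exclude_prefixes=("https://example.com/author/",))
print(sitemap_xml)
```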

HTTP or HTTPS?

When you surf a web page, pay online, or enter personal details in a web form, you’re sending information across the internet. In the early days, servers used a system called HTTP, Hypertext Transfer Protocol. HTTP is a fast way to send data, but it isn’t secure, because your connection to the site is not encrypted. That means third parties can access your data.

That’s why in 2014 Google announced that websites running on HTTPS (Hypertext Transfer Protocol Secure) would get a small rankings boost. HTTPS moves data between browsers and servers in the same way as HTTP but with an added encryption layer on top: SSL (Secure Sockets Layer) or its successor, TLS (Transport Layer Security), which encrypts data and transports it safely across the web.

All this can sound overwhelming for rookie search marketers. The takeaway is that it’s better for your site to be built to run HTTPS from day one or to switch over to HTTPS.

AMP

AMP (Accelerated Mobile Pages) is a Google-sponsored, open-source platform that allows website content to render almost instantly on mobile devices. Content that once loaded slowly on mobile (videos, ads, animations) now loads rapidly no matter what device visitors are using.

The impact on SEO is twofold. First, users abandon sites that take longer to load, as we already know, and mobile users require even faster load times. If your site is slow loading on mobile, bounce rate will increase, and that will negatively impact rankings. Second, there is evidence that search engines (at least Google) prioritize AMP-optimized results in the rankings.

Takeaway for Search Marketers

Crawlers don’t “understand” content like a human brain might; they need certain technical structures and markers to effectively rank your content. Luckily, search engines themselves provide tips on how to improve a site’s technical SEO—check out our technical SEO audit infographic here.

What is On-page SEO?

On-page SEO (also known as on-site SEO) is the process of optimizing everything that’s on a website to try to improve rankings. If technical SEO is the back end, on-page SEO is the front end. It encompasses content formatting, image optimization, basic HTML code, and internal linking practices. The good news is that on-page SEO doesn’t depend on external factors; you have 100% control over the quality of on-page SEO.

Meta Tags

Meta tags and heading tags are small elements inside a web page’s code that help define the structure of the page’s content. For example, an H1 tag alerts crawlers to the title of a blog post or web page. H2 and H3 tags indicate information hierarchy, just as subheads of various sizes would in an analog document. Crawlers then compare the text under each heading tag with the words in the heading to make sure content is relevant.

Good meta-tag SEO helps search engines figure out what a web page’s content is about and when to show it in search results.
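To see the hierarchy a crawler might read from a page, the sketch below extracts H1 to H3 headings with Python's standard-library HTML parser. The class and function names are hypothetical, and this only approximates what a crawler does; it shows the outline, not how a search engine scores it.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Record (level, text) for each h1, h2, and h3 element."""

    HEADING_TAGS = ("h1", "h2", "h3")

    def __init__(self):
        super().__init__()
        self.outline = []
        self._current = None  # heading tag currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADING_TAGS:
            self._current = tag
            self._text = []

    def handle_data(self, data):
        if self._current:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            level = int(tag[1])
            self.outline.append((level, "".join(self._text).strip()))
            self._current = None


def heading_outline(html):
    parser = HeadingOutline()
    parser.feed(html)
    return parser.outline


page = "<h1>What is SEO?</h1><p>Intro</p><h2>Crawling</h2><h3>Bots</h3>"
for level, text in heading_outline(page):
    print("  " * (level - 1) + text)
```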

Page Titles & Meta Descriptions

Page titles aren’t read just by people; search spiders also “read” them when crawling. That means that SEO-optimized on-page titles should include important search terms and be no longer than 70 characters. Any longer than that and search engines will truncate the title in SERPs, which looks bad to searchers.

Meta descriptions—short text snippets summarizing the content of a web page—often appear below the page title in SERP results. While Google doesn’t use meta descriptions directly as a ranking factor, SEO-optimized meta descriptions attract more visits from SERP pages because the people searching will likely read them. This in turn sends search engines positive signals about the site.

Resist the temptation to “stuff” titles and descriptions with a ton of keywords. Keyword stuffing will have an actively negative effect on search rankings. Rather, let search engines know what the page is about through clarity and concision.
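A quick pre-publish check for titles might look like the sketch below. The 70-character limit comes from the text above. The repeated-word test is a crude, hypothetical stand-in for spotting keyword stuffing and is not how search engines detect it.

```python
import re
from collections import Counter

MAX_TITLE_LENGTH = 70  # limit given in the text


def check_title(title, max_repeats=2):
    """Return a list of problems found in a page title."""
    issues = []
    if len(title) > MAX_TITLE_LENGTH:
        issues.append("too long")

    # Count longer words only, so "a", "the", "for", and similar are ignored.
    words = re.findall(r"[a-z0-9]+", title.lower())
    counts = Counter(word for word in words if len(word) > 3)
    repeated = sorted(word for word, count in counts.items() if count > max_repeats)
    if repeated:
        issues.append("repeated terms: " + ", ".join(repeated))
    return issues


print(check_title("What Is SEO? A Beginner's Guide"))
print(check_title("Cheap shoes, cheap shoes online, buy cheap shoes"))
```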

Image Optimization

Images also need to be readable for crawlers; if they’re not, search engines can’t pull up useful visual results. Search engine marketers use ALT text (an HTML attribute) to describe images to crawlers. Optimized ALT text contains relevant search-query terms but should make sense to human readers as well.
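As a small example of auditing ALT text, the sketch below lists images whose alt attribute is missing or empty. The names are hypothetical. Note that an empty alt can be a deliberate choice for purely decorative images, so the output is a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class AltAudit(HTMLParser):
    """Collect the src of every img without descriptive alt text."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            alt = attributes.get("alt") or ""
            if not alt.strip():
                self.missing.append(attributes.get("src"))


def images_missing_alt(html):
    audit = AltAudit()
    audit.feed(html)
    return audit.missing


html = (
    '<img src="shoes.png" alt="Red trail running shoes">'
    '<img src="banner.png">'
    '<img src="spacer.png" alt="">'
)
print(images_missing_alt(html))
```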

Outbound and Internal Links

We already know that crawlers use links to move between web pages when indexing; pages without links are inaccessible to search engines. That makes linking—both outward to high-quality sites and internally between a website’s pages—a vital part of good SEO.

Outbound links take visitors from your website to an external site. These links pass along value to the external site, because search engines understand the link to be a stamp of approval. That means that every time you link to an external site, you’re giving it the thumbs up for search engines, and providing users with a better navigating experience.

Internal linking, or building links between a website’s own pages, improves a site’s crawlability and sends signals to search engines about the most important keywords on a page. It also keeps users navigating your site longer, which search engines understand to be a positive reflection of site quality.
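When auditing a page's links, a first step is often separating internal links from outbound ones. The sketch below does this by comparing each link's host with the host of the page it appears on. The function name and URLs are hypothetical, and treating subdomains as external is a simplifying assumption.

```python
from urllib.parse import urljoin, urlparse


def classify_links(page_url, hrefs):
    """Group links into internal and outbound relative to page_url."""
    site_host = urlparse(page_url).netloc
    groups = {"internal": [], "outbound": []}
    for href in hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links
        same_site = urlparse(absolute).netloc == site_host
        groups["internal" if same_site else "outbound"].append(absolute)
    return groups


links = classify_links(
    "https://example.com/blog/what-is-seo",
    ["/pricing", "keyword-research", "https://example.org/study"],
)
print(links)
```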

Takeaway for Search Marketers

Focus on creating web pages with a clear hierarchy interspersed with internal and external links. If you’re not sure about the quality of your site’s on-page SEO, run it through an on-page SEO checker. The tool gives you a list of activities to improve on-page SEO, previews how pages might appear in search engine results, and identifies opportunities to improve rankings.

What is Off-site SEO?

Off-site SEO encompasses factors that happen outside of your website but still affect your ranking. This includes things like the number of backlinks you have and the quality of the sites that link to you. Off-site SEO shows search engines that your site is valuable and authoritative and deserves to rank highly in results.

Unlike on-site SEO, off-site SEO is not 100% under your control. But you can improve a site’s off-site SEO by focusing on two things: building links and reputation.

Link-building

In off-site SEO, link-building refers to the practice of getting high-quality external sites to link back to you. SEO professionals talk about “link juice” flowing between sites; this just means that every time a reputable site links to you, search engines take that as a sign your site is also reputable, which improves your chances of ranking higher in SERPs.

Not all backlinks are equal. Search engines calibrate the value of a link back to a site based on several factors:

- Linking site authority

- Relevance of the linked-from content

- Relevance of the backlink’s anchor text

- Number of links the backlinking site has

- Whether the link is followed or no-followed

Most search marketers actively seek links from good external sites. Figure out which sites can provide high-quality backlinks.

Once you have a list of high-quality sites to get backlinks from, you can get to work on off-page SEO techniques like guest blogging, brand-mention link acquisition, and broken-link building.

Takeaway for Search Marketers

Optimization for search engines goes beyond the purely technical, requiring creativity and relationship-building activities. It’s an ongoing process, so it pays to do a site audit every month; this helps you monitor your backlink profile, influence, and competitiveness in the SERPs.

SEO Keyword Research

Let’s go back to the beginning of the whole search process for a minute. A search starts when a user types a word or phrase into a search engine. Search engines use keywords (and subtleties like word order, spelling, and tense) to figure out searcher intent, or what the searcher is actually hoping to find.

Keyword research helps search marketers understand what users are searching, what words they’re using to do so, and which of those keywords bring traffic and business to a website.

There are three main types of keywords:

- Short-tail keywords: Searches with only one or two words, like “flights” or “digital marketing.” These keywords might have a high search value, but it’s hard to figure out what users really want from these keywords. Basing a keyword strategy entirely on short tails would lead to a higher bounce rate and less time on page for your site, pulling rankings down in the long term.

- Long-tail keywords: These have three or more words, are more specific, and are often less competitive than short tails. The phrase “what is a long-tail keyword” itself is a great example: the term has respectable search volume, and the searcher intent is very specific.

- Latent Semantic Indexing keywords: Keywords that provide contextual information to search engines. For instance, say a page ranks for an ambiguous term like “titanic.” It could be about the movie, the historical event, or even the dictionary definition of the term. Search engines use related terms on the page to figure that out; a page about the James Cameron film could contain LSI terms like “Kate Winslet.”

An effective keyword research project and strategy combines these three types of keywords, building them into site structure and content to help the site rank in SERPs.
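The word-count distinction between short-tail and long-tail keywords can be sketched as a simple classifier that sorts a keyword list into the two groups. The function names are hypothetical, and the cutoff of three words follows the definitions above. Contextual (LSI-style) terms depend on meaning rather than length, so they are not covered here.

```python
def classify_keyword(keyword):
    """Label a keyword as short-tail (1-2 words) or long-tail (3+ words)."""
    word_count = len(keyword.split())
    return "long-tail" if word_count >= 3 else "short-tail"


def group_keywords(keywords):
    """Sort a list of keywords into short-tail and long-tail groups."""
    groups = {"short-tail": [], "long-tail": []}
    for keyword in keywords:
        groups[classify_keyword(keyword)].append(keyword)
    return groups


candidates = ["flights", "digital marketing", "what is a long-tail keyword"]
print(group_keywords(candidates))
```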

But how do you know which keywords are the right ones to target? Keyword research.

Basics of Keyword Research

Keyword research is a huge topic, and not one we will cover in its entirety here. Instead, we’ll outline the main steps of keyword research and provide links to fill in the gaps.

- Generate a list of potential keywords using Google Autocomplete and Related Searches.

- Use Google’s Keyword Planner to find new keywords that may not have been on your radar.

- Define a list of keywords your site has a chance of ranking for, balancing highly competitive short-tail keywords and focused, low-competition long-tail keywords.

- Categorize search intent, and align different intents to different stages in the sales funnel. For example, someone searching for “buy running shoes” is further along the sales funnel than the person searching “best running shoes for women”; the content you create for each term should reflect that difference.

- Choose a selection of terms from your keyword list, and create content (for example, landing pages or blog posts) that answers searcher questions around those terms.

That basic skeleton underlies all successful keyword strategies; within the basic outline, there’s a whole world of approaches and tactics.

Takeaway for Search Marketers

SEO is built around keywords. Systematic keyword analysis will involve looking at the keywords a site currently ranks for and converts on, missed keyword opportunities, and competitor keyword analysis.

From there, the key to ranking for keywords is creating SEO-optimized content.

How Content Affects SEO Rankings

Content—high-quality, useful information created to attract traffic and move users along the funnel—is the cornerstone of effective SEO. Without content—whether in the form of blog posts, videos, images, product pages, etc.—websites have little chance of appearing in search results. Search engine marketers create SEO content that meets the needs of both users and crawlers.

To create content that meets searcher needs, you must understand search intent.

Search intent

Searches are based on a variety of intentions, from buying or finding a product to solving a problem or navigating to a particular website.

- Informational intent: Searches for information, rather than an intent to purchase or navigate to a specific site. 80% of web searches are thought to be informational.

- Navigational intent: Searches with the intent of going directly to a website—when you type the name of your bank into the search box instead of memorizing the login page URL, for example.

- Transactional intent: Searches with the final intent of making a purchase, signing up to a service, or downloading a file.

Different content matches different intents. For example, a product page could meet a transactional search query, whereas a blog post meets informational intent.

Content that matches searcher intent will produce better engagement metrics, send positive signals to search engines, and rank higher over the long term.
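A first-pass way to sort a keyword list by intent is a rule-based classifier like the sketch below. The term list and the `brand_names` parameter are hypothetical, and real queries are often ambiguous, so treat the output as a starting point for manual review rather than a definitive label.

```python
# Hypothetical trigger words; "buy" and "purchase" are the examples used in this guide.
TRANSACTIONAL_TERMS = {"buy", "purchase", "order", "download", "subscribe"}


def classify_intent(query, brand_names=()):
    """Guess whether a query is transactional, navigational, or informational."""
    lowered = query.lower()
    words = lowered.split()
    if any(word in TRANSACTIONAL_TERMS for word in words):
        return "transactional"
    if any(brand.lower() in lowered for brand in brand_names):
        return "navigational"
    # Anything else is treated as a search for information.
    return "informational"


brands = ["Example Bank"]
for query in ["buy running shoes", "example bank login", "how do search engines work"]:
    print(query, "->", classify_intent(query, brands))
```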

Takeaway for Search Marketers

Content is at the heart of good search engine optimization, but to be successful, you need to be both proactive and reactive in planning and improving SEO content. Weight your content strategy toward the informational searches around your keywords, which are thought to make up about 80% of all searches, and optimize landing pages for transactional query terms like “buy” or “purchase.”

How Usability Affects Rankings

User experience (UX) has what’s known as a secondary impact on SERPs. That means that while UX is not one of the main factors on which search engines base rankings, it still plays a role in defining site relevance and authority.

The exact variables search engines use to judge usability are up for debate. However, a site’s engagement metrics will help search engine marketers figure out whether their pages are living up to Google’s user-centric standards. Click-through rate (CTR), session length, session frequency, and bounce rate all tell you how searchers are reacting to your site and whether they’re finding the information they need.
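The engagement metrics above are simple ratios, as the sketch below shows. It assumes the classic definitions: CTR as clicks divided by impressions, and bounce rate as single-page sessions divided by all sessions. Analytics tools may define these differently, and the `PageStats` fields and sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    impressions: int            # times the page appeared in search results
    clicks: int                 # times searchers clicked through
    sessions: int               # visits to the site
    single_page_sessions: int   # visits that left after one page
    total_session_seconds: float


def click_through_rate(stats):
    return stats.clicks / stats.impressions if stats.impressions else 0.0


def bounce_rate(stats):
    return stats.single_page_sessions / stats.sessions if stats.sessions else 0.0


def average_session_length(stats):
    return stats.total_session_seconds / stats.sessions if stats.sessions else 0.0


stats = PageStats(
    impressions=1000,
    clicks=50,
    sessions=40,
    single_page_sessions=10,
    total_session_seconds=4800.0,
)
print(f"CTR: {click_through_rate(stats):.1%}")
print(f"Bounce rate: {bounce_rate(stats):.1%}")
print(f"Average session: {average_session_length(stats):.0f} seconds")
```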

Marketers aiming to “make pages primarily for users, not for search engines,” as Google recommends, should start with the following:

- Format Content: Most people don’t read in-depth online. Make content easy to scan by including headings and subheadings, keeping paragraphs short, and interspersing images throughout the text.

- Avoid Duplicate Content: Repeating content in numerous locations is bad for users and makes it harder for search engines to display relevant results. Use a rel=canonical tag if you can’t avoid duplicate content.

- Simplify Navigation: UI elements such as breadcrumbs ensure that users don’t run into dead ends when navigating your site.

Positive user experiences mean site visitors are more likely to visit a page more frequently, stay longer when they do, link back to the page, and share it on their networks. All of these will impact positively on how search engines rank the site.

Takeaway for Search Marketers

Although we’ve spoken a lot about optimizing websites and content for search engines, it’s user experience that increasingly drives search rankings. Writing for real people, answering real questions, and providing pleasant online experiences send positive signals to site visitors and crawlers, which is a win-win for you.

What Is SEO: The Takeaway

Search engine optimization is perpetually changing. Every search engine algorithm update is a new opportunity to serve up better content and drive higher-qualified traffic to a website. The tactics may change, but the mission stays the same: Create content and websites that work both for people and for crawlers.

Staying on top of SEO may be challenging, but the right tool kit, techniques, and tactics help to build an SEO strategy that brings long-term benefits.
